From the original article on September 2, 2017. Author: Scott Locklin.

Useful journalism about technology is virtually nonexistent in the present day. It is a fact little commented on, but easily understood. In some not too distant past, there were actually competent science and technology journalists who were paid to be good at their jobs. There are still science and technology journalists, but for the most part, there are no competent ones actually investigating things. The wretches we have now mostly assist with press releases. Everyone capable of doing such work well is either too busy, too well paid doing something else, or too cowardly to speak up and notice the emperor has no clothes.

Consider: there are now 5 PR people for every reporter in America. Reporters are an endangered species. Even the most ethical and well intentioned PR people are supposed to put the happy face on the soap powder, but when they don’t understand a technology, outright deception is inevitable. Modern “reporters” mostly regurgitate what the PR person tells them without any quality control.

The lack of useful reporting is a difficulty presently confronting Western Civilization as a whole; the examples are obvious and not worth enumerating. Competent full-time reporters who are capable of actually debunking fraudulent tech PR bullshit and have a mandate to do so: I estimate that there are approximately zero of these existing in these United States at the moment.

What happens when marketing people at a company talk to some engineers? Even the most honest marketing people hear what they want to hear, and try to spin it in the best possible way to win the PR war, and make their execs happy. Execs read the “news” which is basically marketing releases from their competitors. They think this is actual information, rather than someone else’s press release. Hell, I’ve even seen executives ask engineers for capabilities they heard about from reading their own marketing press releases, and be confused as to why these capabilities were actually science fiction. So, when you read some cool article in TechCrunch on the latest woo, you aren’t actually reading anything real or accurate. You’re reading the result of a human informational centipede where a CEO orders a marketing guy to publish bullshit which is then consumed by decision makers who pay for investments in technology which doesn’t do what they think it does.

Machine learning and its relatives are the statistics of the future: the way we learn about the way the world works. Of course, machines aren’t actually “learning” anything. They’re just doing statistics. Very beautiful, complex, and sometimes mysterious statistics, but it’s still statistics. Nobody really knows how people learn things and infer new things from abstract or practical knowledge. When someone starts talking about “AI,” based on some machine learning technique, the Berserker rage comes upon me. There is no such thing as “AI” as a science or a technology. Anyone who uses that phrase is a dreamer, a liar or a fool.

You can tell when a nebulous buzzword like “AI” has reached peak “human information centipede”: it is when oligarchs start being afraid of it. You have the famous example of Bill Joy being deathly afraid of “nanotech,” a previously hyped “technology” which persists in not existing in the corporeal world. Charlatan thinktanks like the “center for responsible nanotechnology” popped up to relieve oligarchs of their easy money, and these responsible nanotech assclowns went on to … post nifty articles on things that don’t exist.

These days, we have Elon Musk petrified that a near relative of logistic regression is going to achieve sentience and render him unable to enjoy the usufructs of his toils. Charlatan “thinktanks” dedicated to “friendly AI” (and Harry Potter slashfic) have sprung up. Goofball non-profits designed to make “AI” more “safe” by making it available as open source (think about that for a minute) actually exist. Funded, of course, by the paranoid oligarchs who would be better off reading a book, adjusting their exercise program or having their doctor adjust their meds.

Chemists used nanotech hype to drum up funding for research they were interested in. I don’t know of anything useful or interesting which came out of it, but in our declining civilization, I have no real problem with chemists using such swindles to improve their funding. Since there are few to no actual “AI” researchers existing in the world, I suppose the “OpenAI” institute will use their ill gotten gainz to fund machine learning researchers of some kind; maybe even something potentially useful. But, like the chemists, they’re just using it to fund things which are presently popular. How did the popular things get popular? The human information centipede, which is now touting deep reinforcement networks as the latest hotness.

My copy of Sutton and Barto was published in 1998. It’s a tremendous and interesting bunch of techniques, and the TD-gammon solution to Backgammon is a beautiful result for the ages. It is also nothing like “artificial intelligence.” No reinforcement learning gizmo is going to achieve sentience any more than an Unscented Kalman filter is going to achieve sentience. Neural approaches to reinforcement learning are among the least interesting applications of RL, mostly because it’s been done for so long. Why not use RL on other kinds of models? For example, this guy used Nash Equilibrium equations to build a pokerbot using RL. There are also interesting problems where RL with neural nets could be used successfully, and where an open source version would be valuable: natural language, anomaly detection. RL frameworks would also help matters. There are numerous other online approaches which are not reinforcement learning, but potentially even more interesting. No, no, we need to use RL to teach a neural net to play freaking vidya games and call it “AI.” I vaguely recall in the 1980s, when you needed to put a quarter into a machine to play vidya on an 8-bit CPU, the machines had pretty good “AI” which was able to eventually beat even the best players. Great work guys. You’ve worked really hard to do something which was doable in the 1980s.

> The bot learned the game from scratch by self-play, and does not use imitation learning or tree search. This is a step towards building AI systems which accomplish well-defined goals in messy, complicated situations involving real humans.

No, you’ve basically just reproduced TD-gammon on a stupid video game. “AI systems which accomplish well-defined goals in messy … situations” need to have human-like judgment and use experience from unrelated tasks to do well at new tasks. This thing does nothing of the sort. This is a pedestrian exercise in what reinforcement learning is designed to do. The fact that it comes with accompanying marketing video (one which probably cost as much as a half year grad student salary, where it would have been better spent) ought to indicate what manner of “achievement” this is.
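For anyone who has not met temporal-difference learning, the core update behind this family of methods fits in a few lines. What follows is a minimal sketch, assuming the five-state random walk from Sutton and Barto as a toy stand-in; it is not TD-gammon (which paired TD(λ) with a neural network) and not anybody's game bot. The function name, step size and episode count are illustrative choices, not anything from the sources discussed here.

```python
import random


def td0_random_walk(episodes, alpha, rng):
    """Estimate state values for a 5-state random walk with tabular TD(0).

    The walk starts in the middle state and moves left or right with equal
    probability. Falling off the right end pays reward 1, the left end pays 0.
    """
    n_states = 5
    # Index 0 and index n_states + 1 are terminal; their value stays at 0.
    values = [0.0] + [0.5] * n_states + [0.0]
    for _ in range(episodes):
        state = (n_states + 1) // 2  # middle state
        while 0 < state < n_states + 1:
            next_state = state + rng.choice((-1, 1))
            reward = 1.0 if next_state == n_states + 1 else 0.0
            # TD(0) update (undiscounted): nudge the current estimate toward
            # the reward plus the estimate of wherever we landed.
            values[state] += alpha * (reward + values[next_state] - values[state])
            state = next_state
    return values[1:-1]


rng = random.Random(0)  # fixed seed so runs are repeatable
estimates = td0_random_walk(episodes=20000, alpha=0.01, rng=rng)
true_values = [i / 6 for i in range(1, 6)]  # exact values for this walk
for est, true in zip(estimates, true_values):
    print(f"estimate {est:.3f}   exact {true:.3f}")
```

The table of numbers this produces is an estimate of an expected return and nothing more, which is the point: scaling the lookup table up to a neural network changes the function approximator, not the nature of the exercise.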

Unironic use of the word “AI” is a sure tell of dopey credulity, but the stupid is everywhere, unchecked and rampaging like the ending of Tetsuo the Iron Man.

Video: Tetsuo - The Iron Man ending

Imagine someone from smurftown took a data set relating spurious correlations in the periodic table of the elements to stock prices, ran k-means on it, and declared himself a hedge fund manager for beating the S&P by 10%. Would you be impressed? Would you tout this in a public place? Well, somebody did, and it is the thing which finally caused me to chimp out. This is classic Price of Butter in Bangladesh stupid data mining tricks. Actually, price of butter in Bangladesh makes considerably more sense than this. At least butter prices are meaningful, unlike spurious periodic element correlations to stock returns.
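The mechanics of this kind of data mining trick are easy to reproduce. Here is a minimal sketch, assuming pure Gaussian noise for both the "returns" and the candidate "predictors"; the function names, the 1000 candidates and the 20 data points are hypothetical choices made for illustration, not a reconstruction of anyone's actual analysis. It picks whichever noise series best matches the target on the first half of the data, then checks that same series on the second half.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


def best_spurious_fit(n_candidates, n_points, rng):
    """Return (in-sample r, out-of-sample r) for the best random 'predictor'."""
    # Target and candidates are all independent noise: there is nothing to find.
    target = [rng.gauss(0, 1) for _ in range(2 * n_points)]
    candidates = [
        [rng.gauss(0, 1) for _ in range(2 * n_points)]
        for _ in range(n_candidates)
    ]
    train = slice(0, n_points)
    test = slice(n_points, 2 * n_points)
    # Keep whichever candidate correlates most strongly on the first half.
    best = max(candidates, key=lambda c: abs(pearson(c[train], target[train])))
    return pearson(best[train], target[train]), pearson(best[test], target[test])


rng = random.Random(0)  # fixed seed so runs are repeatable
in_sample, out_of_sample = best_spurious_fit(n_candidates=1000, n_points=20, rng=rng)
print(f"in-sample r = {in_sample:+.2f}, out-of-sample r = {out_of_sample:+.2f}")
```

Search enough meaningless series and one of them will fit the past impressively; the held-out half is where such a "signal" usually evaporates. A backtest that reports only the first number tells you about the size of the search, not about the market.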

This is so transparently absurd, I had thought it was a clever troll. So I looked around the rest of the website, and found a heartfelt declaration that VC investments are not bets. Because VCs really caaaare, man. As if high rollers at the horse races never took an interest in the digestion of their favorite horses and superfluous flesh on their jockeys. Russians know what the phrase “VC” means (туалет). I suppose with this piece of information it still could be a clever Onionesque parody, but I have it on two degrees of Erdős and Kevin Bacon that the author of this piece is a real Venture Capitalist, and he’s not kidding. More recently how “Superintelligent AI will kick ass” and “please buy my stacked LSTMs because I said AI.” Further scrolling on the website reveals one of the organizers of OpenAI is also involved. So, I assume we’re supposed to take it seriously. I don’t; this website is unadulterated bullshit.

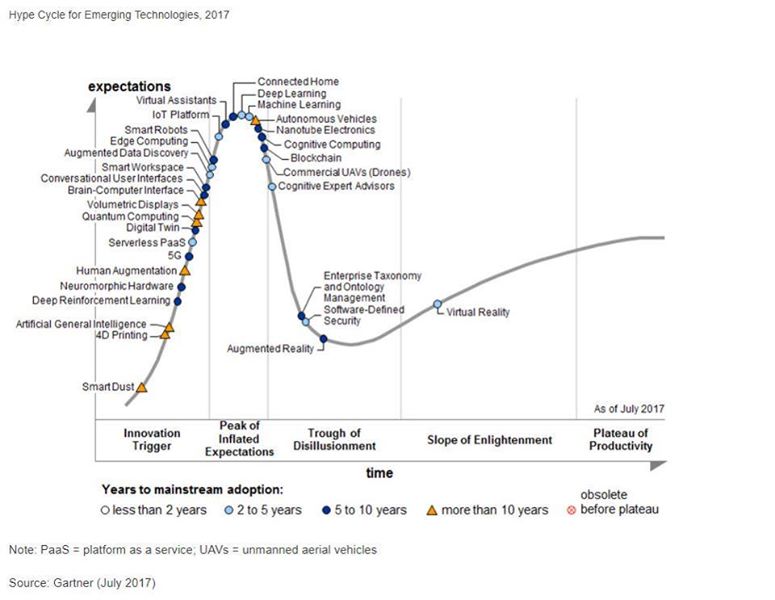

Gartner: they’re pretty good at spotting things which are +10 years away (aka probably never happen).

A winter is coming; another AI winter. Mostly because sharpers, incompetents and frauds are touting things which are not even vaguely true. This is tragic, as there has been some progress in machine learning and potentially lucrative and innovative companies based on it will never happen. As in the first AI winter, it’s because research is being driven by marketing departments and irresponsible people.

But hey, I’m just some bozo writing in his underpants, don’t listen to me, listen to some experts:

http://www.rogerschank.com/fraudulent-claims-made-by-IBM-about-Watson-and-AI

http://thinkingmachines.mit.edu/blog/unreasonable-reputation-neural-networks

https://medium.com/project-juno/how-to-avoid-another-ai-winter-d0915f1e4798#.uwo31nggc

https://www.researchgate.net/publication/3454567_Avoiding_Another_AI_Winter

Edit Add (Sept 5, 2017):

Congress is presently in hearings on “AI”. It’s worth remembering Congress had hearings on “nanotech” in 2006.

http://www.nanotechproject.org/news/archive/congressional_hearing_on_nanotechnology/

> By 2014, it is estimated that there could be $2.6 trillion worth of products in the global marketplace which have incorporated nanotechnology. There is significant concern in industry, however, that the projected economic growth of nanotechnology could be undermined by either real environmental and safety risks of nanotechnology or the public’s perception that such risks exist.

Edit Add (Sept 10, 2017) (Taken from Mark Ames):
